I get asked a lot about my process. It’s a fair question, and one I have really struggled to answer succinctly, because what I make isn’t just about the final image. My medium is the workflow itself. The art is born in the layering, the iterations, and the back-and-forth collaboration.

More Than Just a Prompt

When people think of generative tools like Midjourney, they often imagine typing in a prompt, hitting enter, and boom, you’ve got art. For many people, that is the process, and it is without question completely valid. At the very core of this technology, language is art. The way someone shapes words to pull images from the machine is a creative practice in its own right.

But here’s the thing: it’s not up to anyone else to decide what is or is not art. Mediums don’t get to define that, gatekeepers don’t get to define that. Art is personal. It comes from the soul, from the need to create. If someone spends time and energy making something that brings them joy, that is art.

To dismiss another person’s work because you don’t like the tool they used isn’t about art—it’s about judgment. And when judgment crosses into cruelty, it’s just bullying dressed up as an opinion, something we have far too much of right now. And truly, that says more about the person doing the judging than the person making the work. I don’t tolerate bullying in any form, towards me or anyone else.

A Supportive Community

One of the most beautiful things about working with generative tools is the community that has formed around them. The AI art community is collaborative, generous, and deeply supportive. Artists share techniques, cheer each other on, and lift each other up. There’s a camaraderie here that feels rare and refreshing. But outside the community it can be quite the opposite.

This vitriol isn’t new to me. When Adobe products first hit the scene, they were called “cheating.” Digital artists were ridiculed and dismissed, much like artists using generative tools are today, but now Adobe tools are the industry standard. Generative art is simply the next target of ire for gatekeepers who want to control who gets to be called an artist. And just like before, they’ll be proven wrong.

If you’ve been told to resist this technology, I’ll say it loudly: you are missing out. Especially if you’re neurodivergent. I believe with my whole heart that this technology is particularly powerful for certain brain types. The ability to iterate quickly, to collaborate with a system that responds in real time, to experiment without limits—that is life-giving for creative minds wired to think differently.

There is a whole conversation about ethics and the environment, and I am not unaware of either. As someone who is infinitely inspired by the world around me and who has done a deep dive on the environmental impact of generative art, I can speak on both of these topics with anyone who wants to discuss them.

My Process, Layer by Layer

So what does my workflow actually look like? Let me walk you through a project.

One note before we dive in: I use the Niji algorithm of Midjourney. It was designed for anime, so you will notice that in this exercise. I like it much more than default Midjourney, where I have less control over faces, and there is a certain cartoonish pop surrealism aesthetic I am chasing that Niji gets.

Step 1: The Prompt

It begins with language. For this piece, I started with:

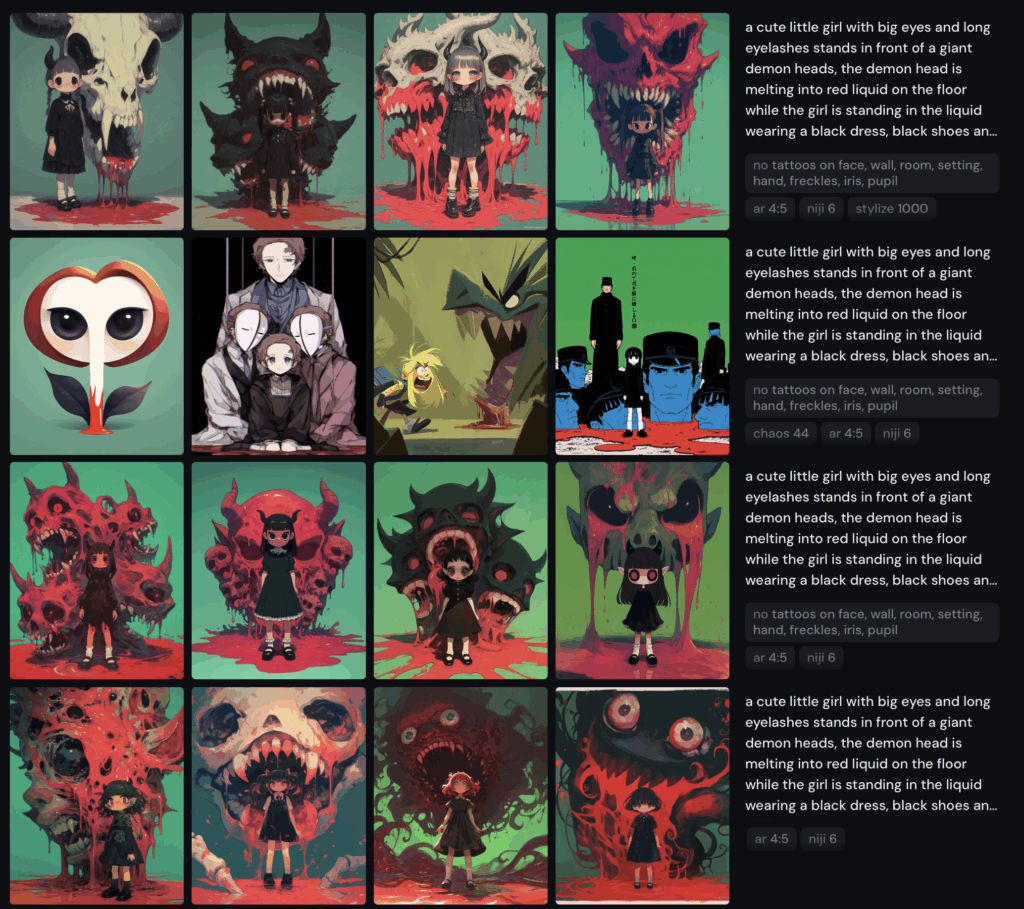

a cute little girl with big eyes and long eyelashes stands in front of a giant demon head, the demon head is melting into red liquid on the floor while the girl is standing in the liquid wearing a black dress, black shoes and white socks. Surreal scene with green background. Full-body portrait

The prompt is about 5% of the process. It’s the spark, not the fire.

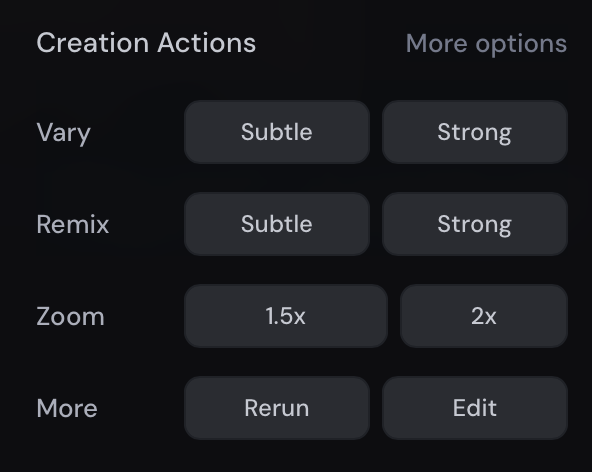

Step 2: Creation Actions

Next, I use Midjourney’s Creation Actions panel—Vary Strong, Vary Subtle, Remix, Zoom, Edit. These are not just buttons; they’re levers for exploration.

- Vary Strong is my go-to, letting me follow a thread across multiple generations.

- Remix lets me add or pull out uploads midstream to test new directions.

- Zoom, which I often use at 1.5x, creates natural space around a subject.

- Edit lets me make larger changes to the composition.

This is where the branching paths begin.

Step 3: Default Results vs. Parameters

The first outputs (the bottom row above) are “default Niji.” From there, I start shaping with parameters:

- --no exceptions → Removes unwanted elements, but also shifts the aesthetic.

- Chaos → Pushes results away from defaults, generating more unusual options.

- Stylize → Lets the algorithm interpret with more artistic freedom. I usually max it out at 1000.

- Aspect Ratio → I generally work in 4:5 these days.

These aren’t minor tweaks—they change the whole feel of the image and this alone is such an exciting process.
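For anyone who likes to see the parameters written out, here is a minimal sketch in Python of how a prompt with these settings could be assembled as text. It is an illustration, not a tool from my workflow: `build_prompt` is a hypothetical helper, the exclusion terms and the chaos value are placeholders, and the value ranges (chaos 0 to 100, stylize 0 to 1000) are what I understand Midjourney to accept, so treat them as assumptions. Only the stylize value of 1000 and the 4:5 aspect ratio come from the list above.

```python
def build_prompt(text, exclude=(), chaos=None, stylize=None, aspect_ratio=None):
    """Append Midjourney-style parameters to a text prompt (hypothetical helper)."""
    parts = [text.strip()]
    if exclude:
        # --no takes a comma-separated list of things to keep out of the image
        parts.append("--no " + ", ".join(exclude))
    if chaos is not None:
        # Assumed range: 0 (predictable) to 100 (most varied)
        if not 0 <= chaos <= 100:
            raise ValueError("chaos is expected to be between 0 and 100")
        parts.append(f"--chaos {chaos}")
    if stylize is not None:
        # Assumed range: 0 to 1000
        if not 0 <= stylize <= 1000:
            raise ValueError("stylize is expected to be between 0 and 1000")
        parts.append(f"--stylize {stylize}")
    if aspect_ratio:
        parts.append(f"--ar {aspect_ratio}")
    return " ".join(parts)


prompt = build_prompt(
    "a cute little girl with big eyes stands in front of a giant demon head",
    exclude=["text", "watermark"],  # placeholder exclusions, not from the article
    chaos=30,                       # placeholder value, not from the article
    stylize=1000,                   # "I usually max it out at 1000"
    aspect_ratio="4:5",             # "I generally work in 4:5"
)
print(prompt)
```

Writing it out this way makes it easy to change one parameter at a time and compare the results side by side.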

Step 4: Upload Stacking

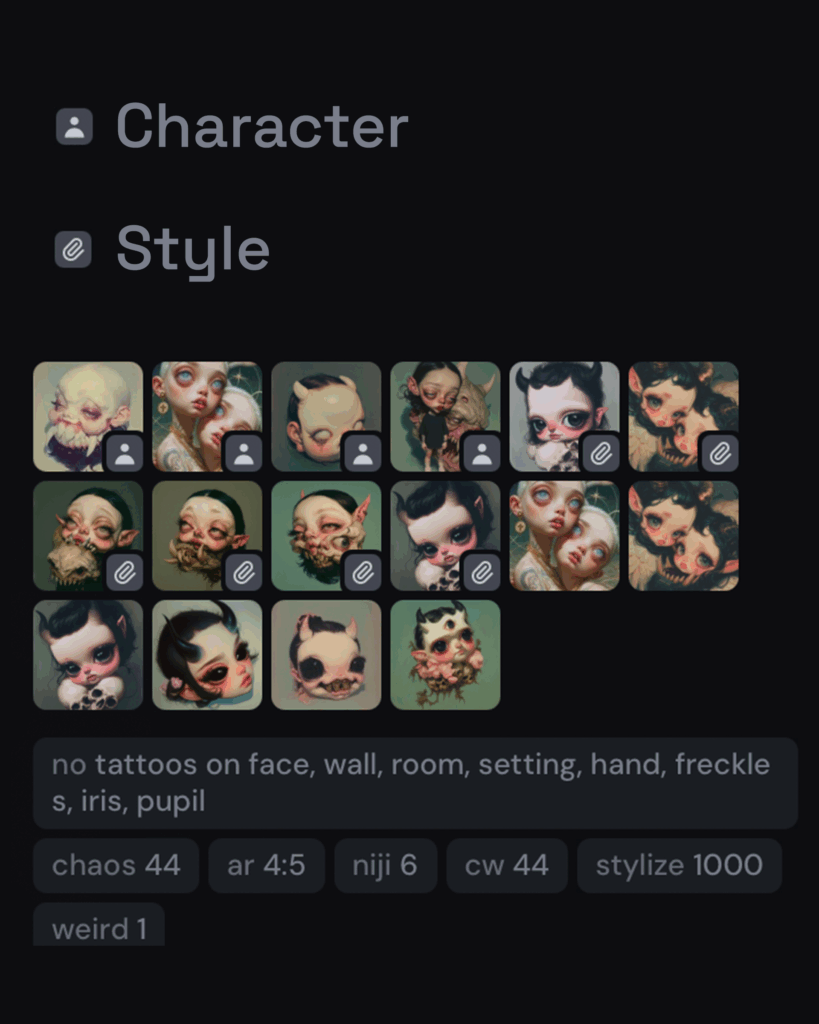

Then comes stacking uploads: character images and style references. I assign Character Weight, which tells the algorithm how much to respect those uploads. Too high, and it’s rigid. Too low, and it drifts. The sweet spot is where the magic happens.

This is where my voice really enters the work, because I’m feeding Midjourney images that are part of my own creative vocabulary. I have created every one of these images. My work is incredibly layered. I create pieces in Adobe Illustrator and Photoshop that I then layer in this way, and repurposing my own art is a profoundly satisfying aspect of this process.
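The stacking described above happens through uploads and a slider, but the same idea can be sketched as text parameters. The Python below is a rough illustration under a few assumptions: `add_references` is a hypothetical helper, the URLs are placeholders, and the `--cref`, `--sref`, and `--cw` flags (with a 0 to 100 weight) reflect my understanding of Niji 6 and Midjourney V6, so newer versions may behave differently.

```python
def add_references(prompt, character_urls=(), style_urls=(), character_weight=None):
    """Attach character and style reference images to a prompt (hypothetical helper)."""
    parts = [prompt.strip()]
    if character_urls:
        parts.append("--cref " + " ".join(character_urls))
    if character_weight is not None:
        if not character_urls:
            raise ValueError("character_weight needs at least one character image")
        # Assumed range: 0 (loose, drifts) to 100 (rigid, sticks to the upload)
        if not 0 <= character_weight <= 100:
            raise ValueError("character_weight is expected to be between 0 and 100")
        parts.append(f"--cw {character_weight}")
    if style_urls:
        parts.append("--sref " + " ".join(style_urls))
    return " ".join(parts)


# Placeholder URLs: stand-ins for images the artist has made and uploaded.
character = ["https://example.com/my-character.png"]
styles = ["https://example.com/my-style.png"]

# Try a few weights to look for the sweet spot between rigid and drifting.
candidates = [
    add_references("girl in a black dress", character, styles, character_weight=w)
    for w in (25, 50, 75)
]
for line in candidates:
    print(line)
```

Sweeping a handful of weights like this mirrors the hunt for the sweet spot: the only thing that changes between runs is how tightly the character upload is respected.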

Step 5: Iteration as Medium

Between every stage, I run Vary Strong again and again. Sometimes I’ll go through hundreds of images before a direction feels right. Iteration isn’t busywork—it’s the medium itself. This is where the art really happens.

I’ll Remix along the way too, swapping styles or characters in and out to chase new ideas. Each fork in the road is another chance to discover something unexpected.

Step 6: Magnific AI

Once I’ve downloaded an image, I run it through Magnific AI. I almost always use the Soft Photo mode with these settings:

- Creativity: 1

- HDR: 1

- Resemblance: 6

- Fractality: 0

- Engine: default

Magnific is subtle but essential. My style leans into a kind of cartoonish hyper-realism, and Magnific provides a final, delicate punch-up—especially in the skin textures. It’s only about 5% of the process, but it makes all the difference.
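If you want to keep a record of settings like these so they are easy to reuse or share, a small preset object is one option. This is only a note-keeping sketch in Python: `UpscalePreset` and `describe` are hypothetical names, and nothing here talks to Magnific itself. The values are the ones listed above.

```python
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class UpscalePreset:
    """A read-only record of upscaler settings (hypothetical, for note-keeping only)."""
    mode: str
    creativity: int
    hdr: int
    resemblance: int
    fractality: int
    engine: str


# The settings listed in Step 6.
SOFT_PHOTO = UpscalePreset(
    mode="Soft Photo",
    creativity=1,
    hdr=1,
    resemblance=6,
    fractality=0,
    engine="default",
)


def describe(preset):
    """Render a preset as a single line, in field order."""
    return ", ".join(f"{name}={value}" for name, value in asdict(preset).items())


print(describe(SOFT_PHOTO))
```

Because the preset is frozen, it cannot be changed by accident, which suits a finishing step that is meant to stay consistent from piece to piece.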

Step 7: Adobe Finishing

The last stage happens in Photoshop and Illustrator.

- Illustrator is where I place and refine text, taking advantage of precise vector control.

- Photoshop is for cleanup—masking, color correction, and final polish.

When I started, I resisted using Adobe. I was so focused on learning generative tools that it felt like “cheating” to bring Adobe into the mix. But that was ridiculous. These are tools I’ve used professionally for over 20 years, and integrating them has made my art fully my own.

In fact, returning to Adobe in this context has been freeing. I’d long lost the fire for creating digital art for myself after years of doing it professionally. Midjourney reignited that creative spark, and by combining it with the skills I’ve built over decades, I’ve become both a better digital artist and better at my job.

Why the Workflow Matters

This isn’t “AI making art.” This is a hybrid digital workflow where human intention and machine imagination meet. My role is not passive. Every variation, every rejection, every remix, every upload—it’s all active choice.

The workflow is the art. The final image is just the evidence.

The Future of Art

I make pop surrealism with machines. Midjourney sparks it, Magnific polishes it, Adobe refines it, and I pull it all together. But beyond my personal process, what excites me is the bigger picture: we are standing at the edge of a new era of creativity.

The future of art isn’t about clinging to old definitions or shutting people out. It’s about embracing new tools, new workflows, and new voices. Art has always evolved—and every time, it’s the gatekeepers who fall behind.

Generative art doesn’t diminish creativity. It multiplies it. And if you ask me, that’s punk rock.